Would You Let A Car Do The Driving For You?

Autonomous cars are coming. The question is: are we ready?

I bet travelling by horse would have been great. The cathartic sound of their shoes on the ground, bouncing off the outer walls of a public house and filling the street. Romantic, right? Well, horses had more tangible benefits too. A jackaroo could wash the taste of 19th-century hardship out of his mouth with whiskey all evening, stumble out of the pub to his horse, climb on and start mumbling some racist tune, and before he knew it he was home, ready to pass out somewhere not very comfortable.

Then the car became commonplace. And what a relief. No more literal shit smell, no more literal one horsepower. For the first few decades nobody cared about drink driving, or about any safety whatsoever. People sat on what amounted to a couch in their vehicles, without seatbelts, safety glass or airbags, and without the prospect of a breathalyser around any busy corner. But those days died as quickly as poor Jimmy Dean.

Responsibility is a strange thing. Some humans seem to yearn for more responsibility and control, to shape their future and be their own master. Yet the same folk look to reduce their personal liability on paper in any way, shape, or form, distancing themselves from both financial and physical harm by handing their books, their schedule and their insurance to someone else.

Automated vehicles are coming, and their inevitable arrival presents us with a new wave of issues that have yet to be discussed publicly, let alone decided. Our responsibility behind the wheel is changing. The landscape of travel will be vastly different from what it has been for the last 100 years. Yes, stumbling from pub to transport to home will once again be the breeze it was with a horse, but our liability and responsibility as ‘users’ of automated cars are yet to be determined.

If a drunkard steps into an autonomous car and programs it to go home, but something or someone is hit along the way, who is to blame? Could the manufacturer's systems be at fault? Should the user have stayed sober? What is the point of an automated car if you can’t let go of some responsibility?

If we remain tied to the vehicle liability-wise, that is, if what happens on the journey is our responsibility, is it really worth the risk of taking our hands off the wheel? If I were unsure about the car’s system, or if I were responsible for the car’s actions, I would find it hard to give up control. Would you? Control over our actions is our own personal safety measure. It allows us to use our judgement in tough situations.

The next chapter of technology is no longer governed by what we can do, it is governed by what we should or should not do.

In autonomous vehicle development circles there is a theoretical problem yet to be solved. It concerns not a technical limitation but a moral one: a thought experiment called the ‘tunnel conundrum’.

The tunnel conundrum forces programmers to decide how the vehicle’s parameters should weigh human life. A tall ask.

There is a little girl playing in the mouth of a tunnel. The tunnel mouth is on the edge of a mountain road, around a tight corner with little visibility. The speed limit is high: this is not an area for pedestrians. Don’t question the probability of the scene. It is not intended to depict everyday life; it exists in the world of parameter setting, where it is just numbers. But we can paint a picture with probabilities, and in this unlikely scenario someone designing the automated car’s brain must make a choice.

The automated car comes barrelling around the corner. It recognises the child as a human life and starts measuring everything to decide what to do next. The speed is too high to stop in time. Veering left sends the car into the side of the mountain, killing the occupant. Veering right ends the same way: dead rider. Slamming on the brakes will not work; there is not enough road, and the child will be hit. What should the car do in this situation?

Does it decide who has the higher chance of surviving, the child or the passenger, and kill the other? Does age carry any weight in the decision? Say the passenger is 80 years old: should they make way for the child to live?

What if there were an adjustable driver setting? Would you choose your own life at all costs? The car saves the driver and kills the child. Would the person who chose that setting have blood on their hands? Face criminal charges? Or has the car ultimately made the decision? Hell, can cars even make decisions?

It really couldn’t be greyer. We are entering the complete unknown.

The tunnel scenario is meant to illustrate the sheer scale of the ethical and moral issues we as humans will soon be handing over to machines, along with the controls. It is these issues that have raised grave concerns among the very people who created a world where all this is possible, people like Bill Gates.

Yes, our lives will likely be made easier by automated vehicles, but, initially at least, there are going to be a lot of growing pains. And it’s these growing pains that may have significant impacts on our personal lives. How would we feel if it were our own mother in the automated car, and the car’s computer decided there was a 5% higher chance that the driver of another vehicle would survive the impending and unavoidable wreck?

Cold calculation is a tough pill for hopeful humans to swallow. Hope in the face of adversity has spawned huge leaps in our development throughout history.

Whether you’re an unemployed philosophy major wondering how Aristotle and Descartes are going to help pay your rent, or anyone else, we all generally appreciate that giving up some control comes at a cost. I cannot help but wonder what the human cost truly is of letting a fleet of computer-controlled metal boxes, full of human lives and travelling at 60 km/h, move around our roads.

Of course I am all for the convenience and accessibility, just as I was when Facebook so happily gave us a ‘free’, useful service. But that shit turned out scary as hell. It all costs something. And autonomous vehicles are going to cost us personal control over a huge portion of our lives.

A (continued) conversation about feminismFeminism's branding issue is limiting its allies.

A (continued) conversation about feminismFeminism's branding issue is limiting its allies.

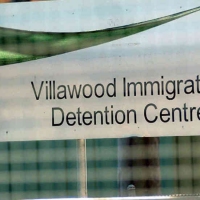

A Visit To Villawood Part 1"If you want to learn something about absolutely anything, go to the primary sources first."

A Visit To Villawood Part 1"If you want to learn something about absolutely anything, go to the primary sources first."